Point- and lower-bound confidence estimates on the completeness (or recall) of an e-discovery production are calculated by sampling documents from both the production and the remainder of the collection (the null set). The most straightforward way to draw this sample is as a simple random sample (SRS) across the whole collection, produced and unproduced. However, the same level of accuracy can be achieved for a fraction of the review cost by using stratified sampling instead. In this post, I introduce the use of stratified sampling in the evaluation of e-discovery productions. In a later post, I will provide worked examples, illustrating the saving in review cost that can be achieved.

Sampling aims to estimate characteristics of a population by looking at a sample of that population. In simple random sampling, we sample from the population indifferently; each element of the population (and each combination of elements) has the same probability of being included. By contrast, in stratified sampling, we divide the population up into disjoint sets or strata; sample from each stratum separately; and combine the evidence from each stratum into an overall estimate.

Stratified sampling improves estimate accuracy in two ways. First, if the characteristic of interest (in e-discovery, the proportion of responsive documents) differs systematically between sub-populations, then accuracy is gained by estimating the characteristic for each sub-population separately, and then aggregating the estimates for the population as a whole. The gain comes because sampling error then reflects only the (lower) variability within each sub-population, rather than also the (higher) variability between sub-populations.

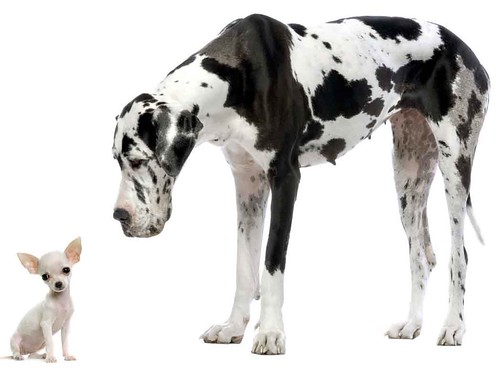

Say, for example, we're estimating the average weight of a mixed herd of Great Danes and Chihuahuas. In stratified sampling, we sample from the Great Danes and from the Chihuahuas separately, and only have to worry about whether the sampled Great Danes are representative of all Great Danes, and the sampled Chihuahuas of all Chihuahuas. In simple random sampling, we sample dogs indifferently, and then have to worry about whether the sampled dogs are representative of dogs in general. Inadvertently sampling more Great Danes than Chihuahuas (relative to their share of the herd) will hurt our estimate a lot; happening by chance to sample more heavy Chihuahuas than light ones will hurt it only a little.
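To put numbers on the first advantage, the sketch below splits a herd's weight variance into a within-breed part and a between-breed part, and compares the variance of the estimated mean under simple random sampling with that under stratified sampling with proportional allocation. The weights are hypothetical, invented for illustration, and sampling is assumed to be with replacement so that the textbook variance formulas apply without a finite-population correction.

```python
import statistics

# Hypothetical weights in kg, invented purely for illustration.
great_danes = [55.0, 60.0, 65.0, 70.0, 75.0]
chihuahuas = [2.0, 2.5, 3.0, 2.5, 2.0]
strata = [great_danes, chihuahuas]
herd = great_danes + chihuahuas

N = len(herd)
n = 4  # total sample size; sampling with replacement keeps the formulas simple
herd_mean = statistics.fmean(herd)

# Decompose the herd's variance into within-breed and between-breed parts.
within = sum(len(s) / N * statistics.pvariance(s) for s in strata)
between = sum(len(s) / N * (statistics.fmean(s) - herd_mean) ** 2 for s in strata)

# SRS: the variance of the sample mean is the whole-herd variance divided by n.
srs_var = statistics.pvariance(herd) / n
# Stratified with proportional allocation (n_h = n * N_h / N): only the within part remains.
stratified_var = within / n

print(f"within={within:.2f} between={between:.2f}")
print(f"variance of estimated mean: SRS={srs_var:.2f}, stratified={stratified_var:.2f}")
```

With these made-up weights almost all of the herd's variance lies between the breeds, so stratifying removes nearly all of the sampling variance of the estimated mean.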

Stratified sampling offers an additional advantage if sub-populations differ in homogeneity. The more heterogeneous a sub-population, the less accurate an estimate from a sample of a given size. We can therefore achieve greater accuracy for the same sample budget by sampling the more heterogeneous sub-population at a higher rate than the more homogeneous one. If our Chihuahuas all have similar weights, while the Great Danes are more variable, then we're better off devoting more samples to the Great Danes, and fewer to the Chihuahuas.
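One standard textbook rule for this is Neyman allocation, which gives each stratum a share of the sample proportional to its size times its standard deviation (N_h × S_h). The post does not commit to a particular rule, so treat this as a sketch of one common choice: `neyman_allocation` is a hypothetical helper, and the stratum standard deviations are assumed known (in practice they would be estimated, for instance from a pilot sample). Neyman allocation minimizes the variance of an overall mean or total; as the footnote notes, allocating for recall is more complicated.

```python
import math

def neyman_allocation(total_n, sizes, std_devs):
    """Split total_n samples across strata in proportion to N_h * S_h.

    sizes: stratum sizes N_h; std_devs: stratum standard deviations S_h
    (assumed known here). At least one N_h * S_h must be positive.
    """
    weights = [N * s for N, s in zip(sizes, std_devs)]
    total_weight = sum(weights)
    raw = [total_n * w / total_weight for w in weights]
    # Round down, then give the leftover samples to the largest fractional remainders.
    alloc = [math.floor(r) for r in raw]
    leftover = total_n - sum(alloc)
    order = sorted(range(len(raw)), key=lambda i: raw[i] - alloc[i], reverse=True)
    for i in order[:leftover]:
        alloc[i] += 1
    return alloc

# Hypothetical herd: 50 Great Danes (S = 8 kg) and 50 Chihuahuas (S = 0.5 kg).
print(neyman_allocation(100, [50, 50], [8.0, 0.5]))  # prints [94, 6]
```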

How does all of this apply to production evaluation in e-discovery? Here, we have two clearly defined sub-populations: the (candidate) production and the null set. These sub-populations differ significantly in the characteristic of interest, namely the proportion of responsive documents: the production is likely to be dense with them, the null set very sparse. The null set is also far more homogeneous, being overwhelmingly non-responsive, and so we can sample it more lightly and assign the annotations saved to the more heterogeneous production. (*)
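A minimal sketch of the recall point estimate that a production/null-set stratification implies, assuming the usual approach of scaling each stratum's sample proportion up to the whole stratum and dividing the estimated responsive documents in the production by the estimated total. The `Stratum` class, `estimated_recall` function, and all counts are hypothetical, invented for illustration; the sketch produces only a point estimate, not a confidence interval.

```python
from dataclasses import dataclass

@dataclass
class Stratum:
    size: int          # documents in the stratum (N_h)
    sampled: int       # documents sampled and reviewed (n_h)
    responsive: int    # sampled documents judged responsive (k_h)
    produced: bool     # True if the stratum is part of the production

    def estimated_yield(self) -> float:
        # Scale the sample proportion up to the whole stratum: N_h * k_h / n_h.
        return self.size * self.responsive / self.sampled

def estimated_recall(strata):
    """Estimated responsive-and-produced divided by estimated responsive overall."""
    produced = sum(s.estimated_yield() for s in strata if s.produced)
    total = sum(s.estimated_yield() for s in strata)
    return produced / total

# Hypothetical counts: equal sample sizes, very different sampling rates.
production = Stratum(size=10_000, sampled=500, responsive=350, produced=True)
null_set = Stratum(size=90_000, sampled=500, responsive=10, produced=False)
print(f"estimated recall: {estimated_recall([production, null_set]):.3f}")
```

Here the production is sampled at 5% and the null set at about 0.56%, in line with the argument above that the sparse, homogeneous null set can be sampled more lightly.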

We can go further and divide the collection into more strata than just the produced and null sets. Most contemporary predictive coding systems are able to rank documents by probability of responsiveness, with the production being determined by truncating the ranking at some cutoff. The documents in the null set just below this cutoff are more likely to be responsive than the documents further down. Therefore, it makes sense to divide the null set into two (or more) strata based on this ranking, and sample the upper null set more densely than the lower null set---to look, in essence, for responsive documents missed by the production where we are more likely to find them.
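The three-stratum design can be sketched as follows: cut the ranking at the production cutoff, cut the null set again a fixed depth below that, and draw an independent simple random sample within each stratum at its own rate. The function names, the `cutoff` and `upper_null_depth` parameters, and the sample sizes are hypothetical choices made for illustration, not prescriptions.

```python
import random

def rank_strata(ranked_ids, cutoff, upper_null_depth):
    """Split a ranking (most-likely-responsive first) into three strata.

    cutoff: number of top-ranked documents that form the production.
    upper_null_depth: how many documents just below the cutoff form the upper null set.
    """
    return {
        "production": ranked_ids[:cutoff],
        "upper_null": ranked_ids[cutoff:cutoff + upper_null_depth],
        "lower_null": ranked_ids[cutoff + upper_null_depth:],
    }

def draw_stratified_sample(strata, sample_sizes, seed=None):
    """Draw a simple random sample (without replacement) independently within each stratum."""
    rng = random.Random(seed)
    return {name: rng.sample(docs, sample_sizes[name]) for name, docs in strata.items()}

# Hypothetical collection of 100,000 ranked document IDs; all numbers are illustrative.
strata = rank_strata(list(range(100_000)), cutoff=10_000, upper_null_depth=10_000)
sample = draw_stratified_sample(
    strata, {"production": 500, "upper_null": 300, "lower_null": 200}, seed=1)
for name, docs in strata.items():
    print(name, len(docs), len(sample[name]), f"rate={len(sample[name]) / len(docs):.2%}")
```

The upper null set is sampled at twelve times the rate of the lower null set, concentrating review effort where missed responsive documents are most likely to turn up.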

Finally, we need a method that gives not just point estimates but confidence intervals. For simple random sampling, a simple binomial confidence interval can be calculated on the proportion of sampled responsive documents that fall in the production. Interval estimation for stratified sampling is more complex, which has perhaps deterred practitioners to date. However, a method of estimating confidence intervals on recall from stratified sampling is described and validated in my recently published ACM TOIS article, Approximate Recall Confidence Intervals (January 2013, Volume 31, Issue 1, pages 2:1--33) (free version on arXiv). In a later post, I will provide worked examples with the two sampling and interval methods, and illustrate the significant cost savings that stratified sampling offers.
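For the simple random sampling case, one common form of the "simple binomial confidence interval" is the exact (Clopper-Pearson) interval. Below is a minimal standard-library sketch of its one-sided lower bound on recall, found by bisection on the binomial tail probability. The function names and sample counts are hypothetical, and this is only the SRS baseline: it does not attempt to reproduce the stratified interval method from the TOIS article.

```python
import math

def prob_at_least(k, m, p):
    """P(X >= k) for X ~ Binomial(m, p), with 0 < p < 1, summed in log space."""
    log_p, log_q = math.log(p), math.log1p(-p)
    return sum(
        math.exp(math.lgamma(m + 1) - math.lgamma(i + 1) - math.lgamma(m - i + 1)
                 + i * log_p + (m - i) * log_q)
        for i in range(k, m + 1)
    )

def srs_recall_lower_bound(k, m, confidence=0.95, tol=1e-10):
    """One-sided exact binomial (Clopper-Pearson) lower bound on recall under SRS.

    m: responsive documents found in the simple random sample
    k: how many of those m fall in the production
    Assumes confidence > 0.5.
    """
    if k == 0:
        return 0.0
    alpha = 1.0 - confidence
    # P(X >= k) rises with p: it is 0 at p = 0 and at least 0.5 at p = k / m.
    lo, hi = 0.0, k / m
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if prob_at_least(k, m, mid) < alpha:
            lo = mid
        else:
            hi = mid
    return lo  # the lower end of the final bracket, i.e. rounded conservatively

# Hypothetical sample: 60 responsive documents found, 48 of them in the production.
print(f"point estimate {48 / 60:.3f}, 95% lower bound {srs_recall_lower_bound(48, 60):.3f}")
```

Working in log space keeps the tail sum from overflowing when the number of sampled responsive documents runs into the thousands.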

(*) In practice, predicting the optimal sample allocation between the null set and the production set is more complicated than just observing which has the higher prevalence, since the production set yield occurs twice in the recall formula (once in the numerator, once in the denominator), while the null set yield enters only as part of the divisor, not as an added term. We will examine this issue in more detail in a later post.
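To make the footnote concrete, here is the two-stratum recall point estimate written out, followed by a first-order (delta-method) approximation to its variance, assuming the two strata are sampled independently. The notation (N for stratum size, n for sample size, k for sampled responsive documents, Y for yield) is assumed for illustration, and this approximation is not the interval method of the TOIS article.

```latex
\hat{Y}_{\text{prod}} = N_{\text{prod}}\,\frac{k_{\text{prod}}}{n_{\text{prod}}}, \qquad
\hat{Y}_{\text{null}} = N_{\text{null}}\,\frac{k_{\text{null}}}{n_{\text{null}}}, \qquad
\hat{R} = \frac{\hat{Y}_{\text{prod}}}{\hat{Y}_{\text{prod}} + \hat{Y}_{\text{null}}}

\frac{\partial R}{\partial Y_{\text{prod}}} = \frac{Y_{\text{null}}}{(Y_{\text{prod}} + Y_{\text{null}})^2}, \qquad
\frac{\partial R}{\partial Y_{\text{null}}} = -\frac{Y_{\text{prod}}}{(Y_{\text{prod}} + Y_{\text{null}})^2}

\operatorname{Var}(\hat{R}) \approx
\frac{Y_{\text{null}}^2 \operatorname{Var}(\hat{Y}_{\text{prod}})
      + Y_{\text{prod}}^2 \operatorname{Var}(\hat{Y}_{\text{null}})}
     {(Y_{\text{prod}} + Y_{\text{null}})^4}
```

In this approximation each stratum's sampling variance is weighted by the square of the other stratum's yield, which is one way to see why the best allocation cannot be read off from prevalence alone.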

Well done. I could easily understand it all. And I love the dog examples.



"Therefore, it makes sense to divide the null set into two (or more) strata based on this ranking, and sample the upper null set more densely than the lower null set---to look, in essence, for responsive documents missed by the production where we are more likely to find them."

I think that this is a promising, intuitively sensible approach.

In addition, this ranking metadata can be used in combination with other document metadata attributes, especially for communication documents, to provide a basis for applying non-content, metadata-based machine learning predictive classifiers as a supplemental systematic method to better detect documents misclassified as non-relevant. However, this is most effective if relevant documents are coded on a gradient.

Question:

Given the very low prevalence generally found in e-discovery corpora, the commonly used margin of error can produce an estimated range for the number of relevant documents that is wide relative to the actual number of relevant documents in the set. Do you have a recommendation on which point estimate (of either prevalence or recall, as the case may be) to select from within that range, given the objectives of e-discovery?

Gerard,

Hi! For prevalence, the "natural" (maximum likelihood) point estimate is unbiased, and should, I think, be used. That is, if 1% of the sample is responsive, use the point estimate that 1% of the collection is responsive, too.

For recall, under the segmented sampling scheme (that is, where the retrieved and unretrieved segments of the population are separately sampled), the "natural" point estimate (viz., estimated number responsive and retrieved divided by total estimated number responsive) is biased (as I show in my paper on "Approximate Recall Confidence Intervals"). In most cases, though, the bias will be small, and in any case, I'm not aware of (and haven't had time to develop!) an unbiased estimator, so I think one is for the time being stuck with the biased one.
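One way to see the bias of the natural recall estimator is to enumerate every possible outcome of a small two-segment sample and average the estimate exactly. This is a hypothetical illustration, not the analysis from the paper: it assumes with-replacement (binomial) sampling within each segment, conditions on at least one responsive document being sampled, and uses invented segment sizes and prevalences.

```python
import math

def binom_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def expected_recall_estimate(N_prod, n_prod, p_prod, N_null, n_null, p_null):
    """Exact E[R_hat] for the ratio estimator, by enumerating every sample outcome.

    Assumes sampling with replacement within each segment (so counts are binomial)
    and conditions on at least one responsive document being sampled.
    """
    expectation = mass = 0.0
    for k_prod in range(n_prod + 1):
        for k_null in range(n_null + 1):
            y_prod = N_prod * k_prod / n_prod
            y_null = N_null * k_null / n_null
            if y_prod + y_null == 0:
                continue  # estimator undefined: no responsive documents sampled
            prob = binom_pmf(k_prod, n_prod, p_prod) * binom_pmf(k_null, n_null, p_null)
            expectation += prob * y_prod / (y_prod + y_null)
            mass += prob
    return expectation / mass

# Deliberately tiny, hypothetical samples so that the bias is easy to see.
true_recall = (1000 * 0.8) / (1000 * 0.8 + 10_000 * 0.02)
estimate = expected_recall_estimate(1000, 10, 0.8, 10_000, 20, 0.02)
print(f"true recall {true_recall:.3f}, expected estimate {estimate:.3f}")
```

With samples this small the estimate is biased upward, largely because the unretrieved-segment sample often contains no responsive documents at all, which pushes the estimate to 1; the bias of ratio estimators like this one generally shrinks as sample sizes grow.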

William